Today some “tech luminaries” asked for a moratorium on large AI models. [1] Kind of like that time Szilard and a bunch of scientists asked the US Government not to use the atomic bombs they had just helped build. [2] Three weeks later, two bombs were dropped on Japan.

First of all, ignore the stupid longtermism-based [4] paper above. If you want to understand what kind of world we are about to enter with AI, I suggest learning some nuclear history. I understand if you feel this is hyperbolic, but the parallels are uncanny: the same nuclear technology that could save us from climate change could also kill millions when repackaged onto an ICBM. The irresponsible way we went about testing nuclear technology is the reason we collectively fear anything nuclear. In its early history, nuclear science was carried out so nonchalantly that we still suffer the devastating effects from the times we went about willy-nilly blowing up islands, deserts, ships and living things. [5], [6] And then there are the nuclear accidents that, despite government oversight, were handled so irresponsibly that they forever marred the relationship we have with such a promising electrifying technology.

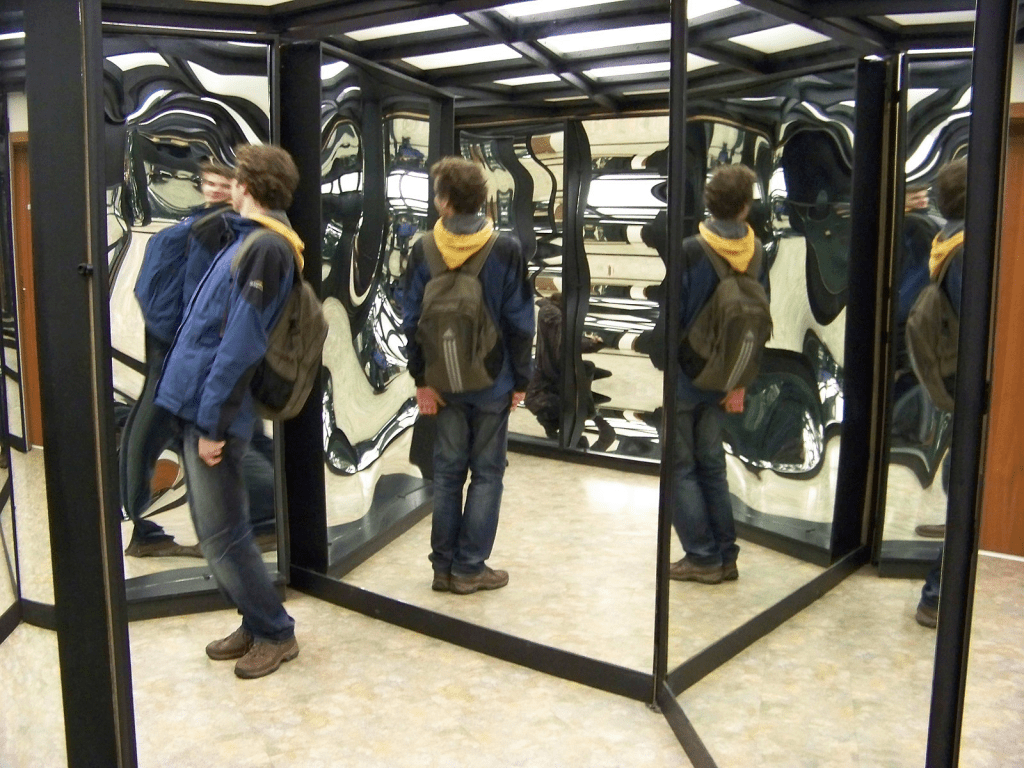

AI House of Mirrors

If you fear AI becoming sentient, don’t. AI is nothing but a mirror. It’ll reflect back what is put in front of it, like a parrot. It cannot be creative any more than a mirror can make me look more buff when I am in front of it. Creativity requires experience, and artistic expression often comes to us from experience with heightened emotions. AI may look like it can create, but the right analogy for that kind of creating is a carnival mirror. It can alter or detect things that are similar to what it has seen. Without experience or emotions it can only riff on what’s put in front of it.

But that’s also part of the problem. Put a bunch of “criminal faces” in front of an AI to train on, and the answer to what an American criminal looks like will likely be a black male. Without good, ethical humans giving it context, AI will reflect the worst of what humanity has already done. The biggest AI model we could ever build will never have the random mixture of neurotransmitters, emotional experiences, and external stimuli that human brains have. Therefore AI cannot imagine a better and more just future for us if we don’t show it what that future should look like.

Irresponsible AI will cause damage. It may be temporary, but the length of that timeline is unknown right now. A decade? A century? How long until we realize we need trained artists, architects, and musicians in our lives again? One of the worst long-term outcomes for the future of AI is a population losing trust in it. AI could manage power grids for efficiency, AI could simulate floods and spare loss of life, AI could give us better answers on how to fix our climate. But fear could destroy this research and cause more unnecessary suffering along the way.

I think we will find that Bad-AI is a lot like a nuclear bomb: it needs humans to proliferate and grow more damaging. Good-AI, like nuclear energy, has the potential to really change quality of life for the entire world. But people in power only see the world as zero-sum, and for someone to win using AI, they’ll contend, there will need to be losers too.

AI isn’t inherently bad, but AI needs applied ethics more than ever. It’s time we nerds stopped asking if something *could* be built and asked an ethicist if it *should* be built before we start building. It’s hard not to feel a bit of anger regarding this letter. After all, tech giants have been eliminating AI ethics departments everywhere. With no government to put some brakes on the very real possibility of AI being used to hurt humans, the very people engineers could ask whether the work they were about to embark on would have consequences are having their jobs eliminated.

We should ask for a moratorium on AI: a moratorium on the use of AI without ethicists. A moratorium on the development of AI for use in weapons. A moratorium on AI for use in policing. There are clear places to pause AI until we understand training data and bias better. AI is too good a tool to be shut down and too powerful to use without rails. AI should not cause more suffering today than our society already causes.

If you’d like to understand more about the ethical AI space, here are a couple of sources. I may add more to this space in the future.

AI has a Burnout Problem – Great article showing the pressure teams and companies are under to deliver AI

DAIR – Timnit Gebru and her team

Sources

[1] “Pause Giant AI Experiments” https://futureoflife.org/open-letter/pause-giant-ai-experiments/

[2] Szilard Petition https://ahf.nuclearmuseum.org/ahf/key-documents/szilard-petition/

[3] The Dangerous Ideas of “Longtermism” and “Existential Risk” https://www.currentaffairs.org/2021/07/the-dangerous-ideas-of-longtermism-and-existential-risk

[4] Against longtermism https://aeon.co/essays/why-longtermism-is-the-worlds-most-dangerous-secular-credo

[5] Bikini Atoll Nuclear Testing https://en.wikipedia.org/wiki/Nuclear_testing_at_Bikini_Atoll

[6] Victims of Nuclear Testing https://www.nti.org/atomic-pulse/downwind-of-trinity-remembering-the-first-victims-of-the-atomic-bomb/

[8] Documentation of datasets and trained models https://cacm.acm.org/magazines/2021/12/256932-datasheets-for-datasets/abstract

[9] AI has a burnout problem https://www.technologyreview.com/2022/10/28/1062332/responsible-ai-has-a-burnout-problem/
